TECHNOLOGY NEWS

Distributed Sensor Networks for Large Volume Surveillance

A sensor network consists of a number of heterogeneous sensors, in stationary and mobile deployments, whose task is typically to sense and gather information over a domain of interest. Depending on the application, the information to be gathered covers observable dynamic and static events and objects, often over a prolonged period of time. The increased functionality of modern sensors, their networkability and the onboard processing capabilities of sensor platforms compound the operational complexity of manually managing large sensor networks. Therefore, to make efficient use of a number of such sensor platforms for network-centric tasks, advanced information processing and decision support tools are necessary. Primary applications of sensor networks include wireless sensor networks [1], large volume surveillance [2], transportation systems [3], ad hoc communication networks [4], health care [5], military tactical operations [6] and home automation [7]. Large volume surveillance refers to monitoring threat situations and day-to-day activity over vast areas such as oceans, coastal borders and arctic regions.

Autonomy in such surveillance sensor networks can be justified for a number of reasons. First, measurements or tracks from a number of heterogeneous sensors are fed back to a central unit, and hence information fusion plays an important role in overall situational awareness. Automating this process will not only speed up information processing at the sensor level but will also provide the framework needed to emulate human-like reasoning. Second, timely data access from different sources is a concern that affects overall awareness of the region of interest. Data that does not arrive in time may be dropped or considered unsuitable for situation assessment and monitoring. Third, inter-node communication is limited in bandwidth and energy. Coordinated communication is not practical due to the complexity of the network. If the inter-node communication is long range and single hop, energy consumption and interference from other communicating nodes become serious issues. On the other hand, if multiple-hop short-range communication is assumed, changes in network topology due to node mobility have to be accounted for. Therefore, fusion algorithms that consider the limitations of the communication channel (packet drops, fading, etc.) need to be developed. Since large-scale synchronization of the network may not be possible due to hardware limitations, non-coherent fusion methods are more appropriate.

In this article we describe an approach to distributed information processing and goal-driven situation assessment for surveillance of large areas (such as coastlines or the arctic regions). There are two main tasks involved: low-level information fusion, and inference for situation assessment. The first task involves rapid data association and fusion to facilitate dynamic update and exchange of data as new sensory information is collected. The second task entails reasoning and situation assessment based on the fused information. This task establishes a case reflecting the up-to-date knowledge about the targets and/or the area of interest. We are developing a surveillance sensor network comprising radars, electro-optical/infrared (EO/IR) and synthetic aperture radar (SAR) imagers, and the Automatic Identification System (AIS). Together these sensors provide position, velocity, shape and size estimates for the objects within scope. For coastal surveillance, six target types have been adopted: fishing boat, pleasure craft, cargo ship, tanker ship, military ship and Unsure.

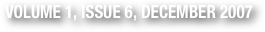

Information gathered by the various nodes in the network is combined at individual platforms and stored in the global situation evidence. Global situation evidence is made up of four elements [8]: the time stamp, the proposition (velocity, position, etc.), a set of proposition qualifiers, and situation evidence objects. Information fusion is implemented at each node. Figure 1 depicts the individual node structure and its information processing modules.
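To make the four-element structure of global situation evidence concrete, the following is a minimal sketch of how one node might hold it. The class names, field names and the `latest` lookup are illustrative assumptions and are not taken from the testbed described in [8].

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class SituationEvidence:
    """One evidence entry; all field names are hypothetical."""
    timestamp: float                                          # time stamp, in seconds
    proposition: str                                          # e.g. "position" or "velocity"
    qualifiers: Dict[str, Any] = field(default_factory=dict)  # proposition qualifiers
    objects: List[str] = field(default_factory=list)          # situation evidence objects (e.g. track IDs)


class GlobalSituationEvidence:
    """Per-node store of evidence entries, kept in arrival order."""

    def __init__(self) -> None:
        self._entries: List[SituationEvidence] = []

    def add(self, entry: SituationEvidence) -> None:
        self._entries.append(entry)

    def latest(self, proposition: str, obj: str) -> Optional[SituationEvidence]:
        """Most recent entry asserting `proposition` about `obj`, or None."""
        matches = [e for e in self._entries
                   if e.proposition == proposition and obj in e.objects]
        return max(matches, key=lambda e: e.timestamp, default=None)


# Usage: two position reports for the same (hypothetical) track.
store = GlobalSituationEvidence()
store.add(SituationEvidence(10.0, "position", {"value": (1.0, 2.0)}, ["track-7"]))
store.add(SituationEvidence(12.0, "position", {"value": (1.5, 2.5)}, ["track-7"]))
newest = store.latest("position", "track-7")
```

Keeping the time stamp on every entry lets a reasoning module ask for the most recent knowledge about a target without assuming that reports arrive in order.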

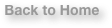

Local measurements from the sensors at each node are first associated with the appropriate target using a Munkres minimum-cost assignment solution, in which the statistical distance is the cost of a given pairing: $c_{ij} = e_{ij}^{T} S^{-1} e_{ij}$, where $e_{ij}$ is the difference between the measured and predicted values of the sensor measurement at each time step and $S$ is the covariance of $e_{ij}$. Data association and fusion are performed sequentially [9], as depicted in Figure 2.
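The association step can be illustrated with a small sketch. It computes the statistical distance in the usual Mahalanobis form, using the inverse of the covariance of the difference between measurement and prediction, and then picks the pairing with the lowest total cost. To stay dependency-free it searches all permutations exhaustively. This is not the Munkres algorithm itself, but it returns the same minimum-cost assignment for a handful of targets. The function names, the 2-D measurements, the single shared covariance and the equal number of measurements and targets are all assumptions made for illustration.

```python
from itertools import permutations


def inv2(S):
    """Inverse of a 2x2 matrix stored as nested lists."""
    (a, b), (c, d) = S
    det = a * d - b * c
    if det == 0:
        raise ValueError("covariance matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def statistical_distance(z, z_pred, S):
    """c = e^T S^{-1} e for the 2-D difference e = z - z_pred."""
    e = [z[0] - z_pred[0], z[1] - z_pred[1]]
    Si = inv2(S)
    return (e[0] * (Si[0][0] * e[0] + Si[0][1] * e[1])
            + e[1] * (Si[1][0] * e[0] + Si[1][1] * e[1]))


def associate(measurements, predictions, S):
    """Return (assignment, total_cost); assignment[i] is the target for measurement i.

    Exhaustive search stands in for Munkres here: same optimum, but only
    feasible for a few targets. Assumes as many measurements as targets.
    """
    n = len(measurements)
    cost = [[statistical_distance(z, p, S) for p in predictions]
            for z in measurements]
    best = min(permutations(range(n)),
               key=lambda perm: sum(cost[i][perm[i]] for i in range(n)))
    return list(best), sum(cost[i][best[i]] for i in range(n))


# Usage: two measurements, two predicted target positions, unit covariance.
assignment, total = associate(
    measurements=[(10.1, 5.0), (0.2, -0.1)],
    predictions=[(0.0, 0.0), (10.0, 5.0)],
    S=[[1.0, 0.0], [0.0, 1.0]],
)
```

For realistic numbers of tracks, a polynomial-time solver such as SciPy's `scipy.optimize.linear_sum_assignment` would replace the permutation search while using the same cost matrix.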

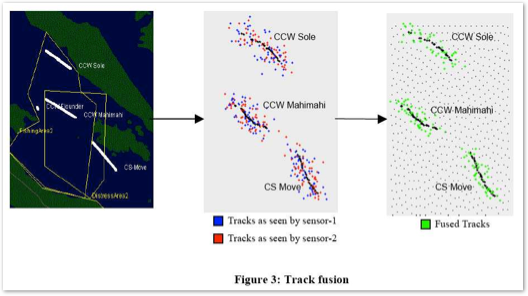

Covariance Intersection [10] based fusion accounts for possible but unknown correlation between multiple measurements. For example, sensors mounted on the same platform will show a similar noise pattern in their measurements; although some correlation exists, quantifying it accurately may not be possible. The mean $\bar{s}$ and covariance $P_{ss}$ of the fused estimate are determined through a convex combination of the individual inverse covariances: $P_{ss}^{-1} = w P_{mm}^{-1} + (1 - w) P_{nn}^{-1}$, and hence $\bar{s} = P_{ss} \left[ w P_{mm}^{-1} \bar{m} + (1 - w) P_{nn}^{-1} \bar{n} \right]$, where $w \in [0, 1]$ is chosen to minimize some cost function (typically the trace of $P_{ss}$), $\bar{m}$ and $\bar{n}$ are the two estimates being fused, and $P_{mm}$ and $P_{nn}$ are their approximated error covariances. Figure 3 shows the results of sensor-level track fusion based on the techniques described above.
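The Covariance Intersection update can be sketched for two 2-D estimates as follows. The weight w is found by a simple grid search over [0, 1] that minimizes the trace of the fused covariance. The grid search, the 2x2 restriction and all function names are assumptions chosen to keep the example short; a scalar optimizer could equally be used.

```python
def inv2(P):
    """Inverse of a 2x2 matrix stored as nested lists."""
    (a, b), (c, d) = P
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def combine(w, A, B):
    """Element-wise w*A + (1 - w)*B for 2x2 matrices."""
    return [[w * A[i][j] + (1 - w) * B[i][j] for j in range(2)]
            for i in range(2)]


def matvec(A, v):
    """Product of a 2x2 matrix and a 2-vector."""
    return [A[0][0] * v[0] + A[0][1] * v[1],
            A[1][0] * v[0] + A[1][1] * v[1]]


def covariance_intersection(m, Pmm, n, Pnn, steps=100):
    """Fuse estimates (m, Pmm) and (n, Pnn); return (s, Pss, w)."""
    Pm_inv, Pn_inv = inv2(Pmm), inv2(Pnn)
    best_w, best_Pss = None, None
    for k in range(steps + 1):
        w = k / steps
        # Pss^-1 = w * Pmm^-1 + (1 - w) * Pnn^-1
        Pss = inv2(combine(w, Pm_inv, Pn_inv))
        trace = Pss[0][0] + Pss[1][1]
        if best_Pss is None or trace < best_Pss[0][0] + best_Pss[1][1]:
            best_w, best_Pss = w, Pss
    # s = Pss * [ w * Pmm^-1 * m + (1 - w) * Pnn^-1 * n ]
    a, b = matvec(Pm_inv, m), matvec(Pn_inv, n)
    weighted = [best_w * a[i] + (1 - best_w) * b[i] for i in range(2)]
    return matvec(best_Pss, weighted), best_Pss, best_w


# Usage: one estimate is accurate in x, the other in y.
s, Pss, w = covariance_intersection(
    m=[0.0, 0.0], Pmm=[[1.0, 0.0], [0.0, 4.0]],
    n=[2.0, 2.0], Pnn=[[4.0, 0.0], [0.0, 1.0]],
)
```

In this symmetric example the search settles on w = 0.5, and the fused covariance is more conservative than a Kalman-style fusion that assumes independent errors, which is the price paid for not knowing the cross-correlation.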

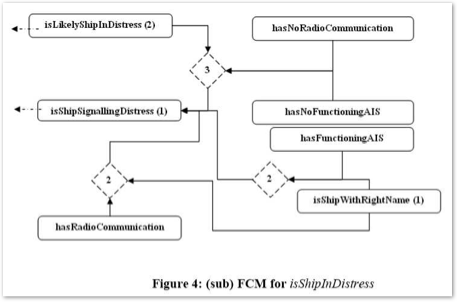

The fused tracks are then stored in the global situation evidence. The reasoning module continuously assesses the gathered situation evidence to establish situations of interest. The field rules for situation assessment used by military personnel served as the basis for a fuzzy cognitive map (FCM) representation. An FCM is a collection of nodes and connecting arcs that represent the system states and the causal interactions between them, respectively. Here, the FCM is used as a multi-level deduction framework for backward reasoning to identify system goals. In essence, once the specific surveillance objective or goal is set, the FCM decomposes it into sub-goals until decisions based on sensor information can be made. A mathematical formalism converts the probabilistic confidence associated with sensor information into fuzzy numbers, thereby accommodating the uncertainty introduced by conflicting data or improper fusion. Based on predefined membership functions, situation evidence is mapped to a corresponding fuzzy number, which the FCM uses for inference. Figure 4 displays the sub-graph for the goal isShipInDistress. The rectangular nodes represent sub-goals, whereas the dashed nodes represent conditional causality.
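The goal-driven use of an FCM can be sketched as a recursive evaluation: a goal is expanded into sub-goals until leaf nodes are reached, where membership functions map situation evidence to a degree in [0, 1]. Only the goal name isShipInDistress comes from the article. The sub-goal names, arc weights, membership thresholds, evidence keys and the tanh squashing function below are hypothetical choices for illustration and do not reproduce the sub-graph in Figure 4.

```python
import math


def ramp_up(x, lo, hi):
    """Membership rising linearly from 0 at `lo` to 1 at `hi`."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def ramp_down(x, lo, hi):
    """Membership falling linearly from 1 at `lo` to 0 at `hi`."""
    return 1.0 - ramp_up(x, lo, hi)


# Hypothetical leaf nodes: node -> (evidence key, membership function).
LEAVES = {
    "isNearlyStationary": ("speed_knots", lambda v: ramp_down(v, 1.0, 5.0)),
    "isAISSilent": ("minutes_since_ais", lambda v: ramp_up(v, 10.0, 60.0)),
}

# Hypothetical causal arcs: goal -> [(sub-goal, weight in [-1, 1])].
ARCS = {
    "isShipInDistress": [("isNearlyStationary", 0.7), ("isAISSilent", 0.8)],
}


def evaluate(goal, evidence):
    """Backward reasoning: expand `goal` into sub-goals down to the evidence."""
    if goal in LEAVES:
        key, membership = LEAVES[goal]
        return membership(evidence[key])
    total = sum(weight * evaluate(sub, evidence) for sub, weight in ARCS[goal])
    return math.tanh(total)  # squash the weighted sum into (-1, 1)


# Usage: a slow, AIS-silent vessel versus a fast, recently reporting one.
suspect = evaluate("isShipInDistress",
                   {"speed_knots": 0.5, "minutes_since_ais": 90.0})
normal = evaluate("isShipInDistress",
                  {"speed_knots": 12.0, "minutes_since_ais": 2.0})
```

Because evaluation starts from the goal, only the evidence needed by that goal's sub-graph is consulted, which matches the goal-driven flavour of the reasoning described above.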

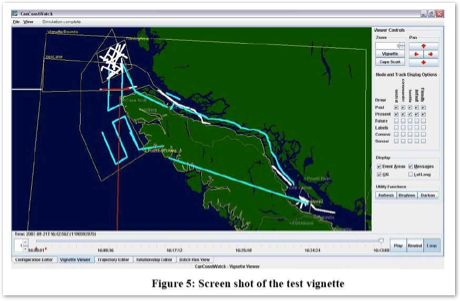

Figure 5 depicts a screenshot of the user interface. As a proof of concept, this system can be used to evaluate the performance of the constituent methods and approaches for surveillance applications under various scenarios and conditions. Future research in sensor networks should focus on developing reliable communication paradigms for such network-enabled tasks, efficient energy management and distributed control architectures. With regard to the network itself, aspects related to autonomic topology, scalability and self-healing capabilities should be investigated.

References:

[1] K. Romer and F. Mattern, “The design space of wireless sensor networks,” IEEE Wireless Communications, vol. 11, no. 6, pp. 54-61, 2004.

[2] P. Valin, et al., “Testbed for large volume surveillance through distributed fusion and resource management,” Proc. of the SPIE (Defense Transformation and Net-Centric Systems), vol. 6578, pp. 657803-657814, 2007.

[3] S. Tang, F.Y. Wang, and Q. Miao, “ITSC 05: current issues and research trends,” IEEE Intelligent Systems, vol. 21, no. 2, pp. 96-102, 2006.

[4] J.G. Andrews, S. Weber, and M. Haenggi, “Ad hoc networks: To spread or not to spread,” IEEE Communications Magazine, vol. 45, no. 12, pp. 84-91, 2007.

[5] D. Trossen and D. Pavel, “Sensor networks, wearable computing, and healthcare applications,” IEEE Pervasive Computing, vol. 6, no. 2, pp. 58-61, 2007.

[6] C.Y. Chong and S.P. Kumar, “Sensor networks: evolution, opportunities, and challenges,” Proceedings of the IEEE, vol. 91, no. 8, pp. 1247-1256, 2003.

[7] S.H. Hong, B. Kim, and D.S. Eom, “A base-station centric data gathering routing protocol in sensor networks useful in home automation applications,” IEEE Trans. on Consumer Electronics, vol. 53, no. 3, pp. 945-951, 2007.

[8] H. Wehn, et al., “A distributed information fusion testbed for coastal surveillance,” Proc. of Intl. Conf. on Information Fusion, Quebec City, Quebec, Canada, 9-12 July 2007.

[9] Z. Li, H. Leung, P. Valin, and H. Wehn, “High level data fusion system for CanCoastWatch,” Proc. of Intl. Conf. on Information Fusion, Quebec City, Quebec, Canada, 9-12 July 2007.

[10] S. Julier and J.K. Uhlmann, “A non-divergent estimation algorithm in the presence of unknown correlations,” Proc. of American Control Conf., vol. 4, pp. 2369-2373, 1997.

Henry Leung and Sandeep Chandana, Department of Electrical and Computer Engineering, University of Calgary, Canada (Email: leungh@ucalgary.ca)

This article was recommended by Michael Tse.